Cerebras Systems has announced Condor Galaxy, a network of nine interconnected supercomputers that offer a new approach to AI compute and promise to significantly reduce AI model training time.

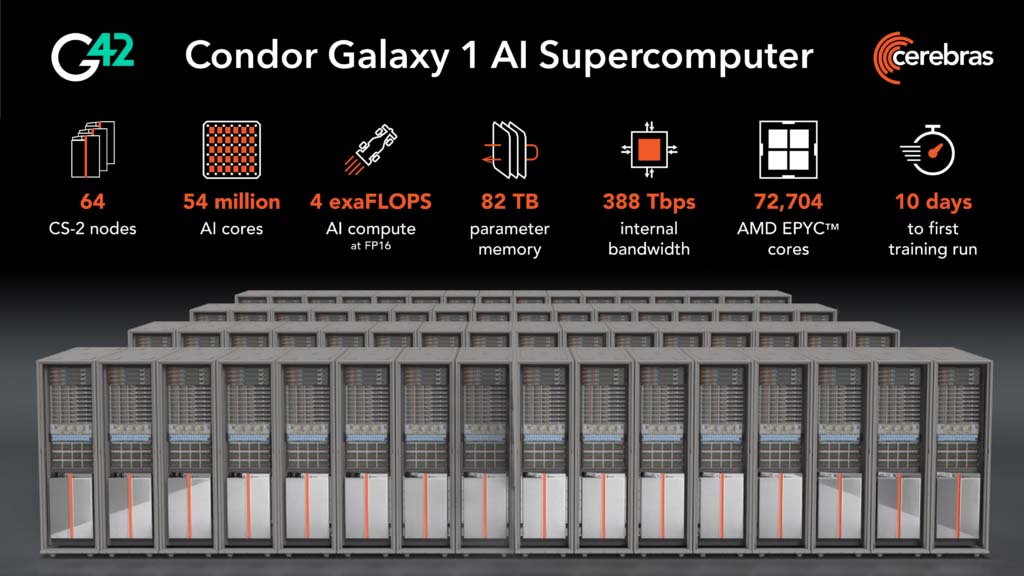

The first AI supercomputer on this network, Condor Galaxy 1 (CG-1), delivers 4 exaFLOPs of AI compute across 54 million cores. Cerebras and G42 plan to deploy two more such supercomputers, CG-2 and CG-3, in the U.S. in early 2024.

With a planned capacity of 36 exaFLOPs in total, this supercomputing network is expected to advance the use of AI globally.

“Collaborating with Cerebras to rapidly deliver the world’s fastest AI training supercomputer and laying the foundation for interconnecting a constellation of these supercomputers across the world has been enormously exciting,” says Talal Alkaissi, CEO of G42 Cloud. “This partnership brings together Cerebras’ extraordinary compute capabilities, together with G42’s multi-industry AI expertise. G42 and Cerebras’ shared vision is that Condor Galaxy will be used to address society’s most pressing challenges across healthcare, energy, climate action and more.”

Located in Santa Clara, California, CG-1 links 64 Cerebras CS-2 systems together into a single, easy-to-use AI supercomputer, with an AI training capacity of 4 exaFLOPs.

Cerebras and G42 offer CG-1 as a cloud service, allowing customers to enjoy the performance of an AI supercomputer without having to manage or distribute models over physical systems.

“Delivering 4 exaFLOPs of AI compute at FP16, CG-1 dramatically reduces AI training timelines while eliminating the pain of distributed compute,” says Andrew Feldman, CEO of Cerebras Systems. “Many cloud companies have announced massive GPU clusters that cost billions of dollars to build, but that are extremely difficult to use.

“Distributing a single model over thousands of tiny GPUs takes months of time from dozens of people with rare expertise. CG-1 eliminates this challenge. Setting up a generative AI model takes minutes, not months, and can be done by a single person.

“CG-1 is the first of three 4 exaFLOP AI supercomputers to be deployed across the U.S. Over the next year, together with G42, we plan to expand this deployment and stand up a staggering 36 exaFLOPs of efficient, purpose-built AI compute.”

About Condor Galaxy 1 (CG-1)

Optimized for large language models and generative AI, CG-1 delivers 4 exaFLOPs of 16-bit AI compute, with standard support for models of up to 600 billion parameters and extendable configurations that support models of up to 100 trillion parameters.

CG-1 has 54 million AI-optimized compute cores and 388 terabits per second of fabric bandwidth, and is fed by 72,704 AMD EPYC processor cores. Unlike any known GPU cluster, it delivers near-linear performance scaling from one to 64 CS-2 systems using simple data parallelism.

“AMD is committed to accelerating AI with cutting-edge high-performance computing processors and adaptive computing products as well as through collaborations with innovative companies like Cerebras that share our vision of pervasive AI,” says Forrest Norrod, executive vice president and general manager of the Data Center Solutions Business Group at AMD. “Driven by more than 70,000 AMD EPYC processor cores, Cerebras’ Condor Galaxy 1 will make accessible vast computational resources for researchers and enterprises as they push AI forward.”

CG-1 offers native support for training with long sequence lengths, up to 50,000 tokens out of the box, without any special software libraries. Programming CG-1 requires no complex distributed programming languages, so even the largest models can be run without spending weeks or months distributing work over thousands of GPUs.

Rendering of the complete Condor Galaxy 1 AI supercomputer, which features 54 million cores across 64 CS-2 nodes, supported by over 72,000 AMD EPYC cores, for a total of 4 exaFLOPs of AI compute at FP16.

Photo credit: Rebecca Lewington/Cerebras Systems

CG-1 is the first of three 4 exaFLOP AI supercomputers (CG-1, CG-2, and CG-3) built and located in the U.S. by Cerebras and G42 in partnership. These three AI supercomputers will be interconnected into a 12 exaFLOP, 162-million-core distributed AI supercomputer consisting of 192 Cerebras CS-2s and fed by more than 218,000 high-performance AMD EPYC CPU cores. G42 and Cerebras plan to bring six additional Condor Galaxy supercomputers online in 2024, for a total of 36 exaFLOPs of compute.
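The aggregate figures for the network follow from multiplying CG-1's specifications by the number of systems. The short Python sketch below shows that arithmetic. It assumes every Condor Galaxy system matches CG-1's configuration. The article implies this only indirectly, through the "three 4 exaFLOP" description and the nine-system, 36 exaFLOP total. The names `CG1` and `network_totals` are hypothetical and used only for illustration.

```python
# Illustrative tally of Condor Galaxy network totals.
# ASSUMPTION: every Condor Galaxy system is identical to CG-1, so totals
# scale linearly with the number of systems. CG1 and network_totals are
# hypothetical names, not part of any Cerebras or G42 software.

CG1 = {
    "exaflops_fp16": 4,
    "cs2_systems": 64,
    "ai_cores": 54_000_000,  # the article's rounded "54 million" figure
    "epyc_cores": 72_704,
}


def network_totals(per_system: dict, n_systems: int) -> dict:
    """Multiply each per-system figure by the number of identical systems."""
    return {key: value * n_systems for key, value in per_system.items()}


first_phase = network_totals(CG1, 3)   # CG-1, CG-2 and CG-3
full_network = network_totals(CG1, 9)  # all nine planned systems

for key, value in first_phase.items():
    print(f"three systems, {key}: {value:,}")
print(f"nine systems, exaflops_fp16: {full_network['exaflops_fp16']}")
```

Three systems give 12 exaFLOPs, 192 CS-2s, 162 million AI cores and 218,112 EPYC cores. That last figure is consistent with the "more than 218,000" quoted above. Because the 54 million core count is itself rounded, the 162 million total is approximate as well.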