Researchers are taking deep learning for a deep dive. Literally.

The Woods Hole Oceanographic Institution (WHOI) Autonomous Robotics and Perception Laboratory (WARPLab) and MIT are developing a robot for studying coral reefs and their ecosystems.

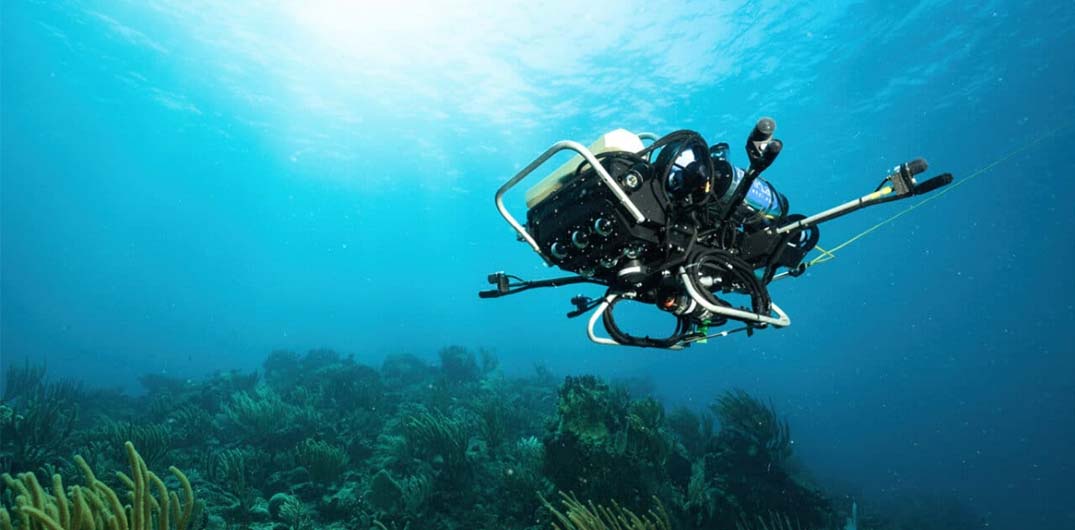

The WARPLab autonomous underwater vehicle (AUV), enabled by an Nvidia Jetson Orin NX module, is an effort from the world’s largest private ocean research institution to turn the tide on reef declines.

Around 25% of coral reefs worldwide have vanished in the past three decades, and most of the remaining reefs are heading for extinction, according to the WHOI Reef Solutions Initiative.

The AUV, dubbed CUREE (Curious Underwater Robot for Ecosystem Exploration), gathers visual, audio, and other environmental data alongside divers to help understand the human impact on reefs and the sea life around them. The robot runs an expanding collection of Nvidia Jetson-enabled edge AI to build 3D models of reefs and to track creatures and plant life. It also runs models to navigate and collect data autonomously.

WHOI, whose submersible Alvin first explored the Titanic in 1986, is developing CUREE to scale up data gathering and aid in mitigation strategies. The oceanic research organisation is also exploring simulation and digital twins to better replicate reef conditions and test solutions, using tools such as Nvidia Omniverse, a development platform for building and connecting 3D tools and applications.

Nvidia, for its part, is creating a digital twin of Earth in Omniverse and developing Earth-2, which it describes as the world’s most powerful AI supercomputer for predicting climate change.

Underwater AI: DeepSeeColor model

Anyone who’s gone snorkeling knows that seeing underwater isn’t as clear as seeing on land. Over distance, water attenuates sunlight unevenly across the visible spectrum, muting some colours more than others; red fades first. At the same time, particles in the water scatter light back toward the viewer, creating a hazy veil known as backscatter.

A team from WARPLab recently published a research paper on undersea vision correction that helps mitigate these problems and supports the work of CUREE. The paper describes a model, called DeepSeeColor, that uses a sequence of two convolutional neural networks to reduce backscatter and correct colours in real time on the Nvidia Jetson Orin NX while the robot is underwater.
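To make the two effects concrete, here is a minimal per-pixel sketch built on a widely used physics-based underwater image formation model (the revised model of Akkaynak and Treibitz, used by Sea-thru). Each colour channel c of the observed pixel is the true colour attenuated over range z, plus a backscatter term: I_c = J_c·exp(−β^D_c·z) + B^∞_c·(1 − exp(−β^B_c·z)). This is an assumption for illustration, not DeepSeeColor’s code: the function names and coefficient values are hypothetical, and here the coefficients are supplied by hand, whereas a learned approach would estimate them from the images.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]


def form_pixel(j: RGB, z: float, beta_d: RGB, beta_b: RGB, b_inf: RGB) -> RGB:
    """Forward model: attenuated true colour plus backscatter, per channel."""
    return tuple(
        jc * math.exp(-bd * z) + bi * (1.0 - math.exp(-bb * z))
        for jc, bd, bb, bi in zip(j, beta_d, beta_b, b_inf)
    )


def restore_pixel(i: RGB, z: float, beta_d: RGB, beta_b: RGB, b_inf: RGB) -> RGB:
    """Inverse model: subtract backscatter, then undo distance-dependent attenuation."""
    out = []
    for ic, bd, bb, bi in zip(i, beta_d, beta_b, b_inf):
        direct = max(ic - bi * (1.0 - math.exp(-bb * z)), 0.0)  # remove the haze
        out.append(min(direct * math.exp(bd * z), 1.0))  # restore colour, clip to [0, 1]
    return tuple(out)


if __name__ == "__main__":
    # Hypothetical coefficients: red attenuates much faster than green or blue,
    # and the backscatter veil is blue-green.
    beta_d = (0.60, 0.10, 0.05)
    beta_b = (0.40, 0.30, 0.30)
    b_inf = (0.05, 0.30, 0.40)
    true_colour = (0.8, 0.5, 0.3)
    seen = form_pixel(true_colour, 3.0, beta_d, beta_b, b_inf)
    print(seen)
    print(restore_pixel(seen, 3.0, beta_d, beta_b, b_inf))
```

With these made-up numbers, a pixel whose true red value is 0.8 is observed at roughly 0.17 from 3 m away, and the inverse model recovers the original values. The hard part, which this sketch sidesteps, is estimating the range and coefficients for every pixel fast enough to run in real time.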

“Nvidia GPUs are involved in a large portion of our pipeline because, basically, when the images come in, we use DeepSeeColor to color correct them, and then we can do the fish detection and transmit that to a scientist up at the surface on a boat,” says Stewart Jamieson, a robotics PhD candidate at MIT and AI developer at WARPLab.
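The flow Jamieson describes (colour-correct each incoming frame, run fish detection, send the results to the surface) can be pictured as a chain of stages. The sketch below is hypothetical: `run_pipeline`, `Detection` and the stage callables are placeholders standing in for DeepSeeColor and the detector, and the choice to send only a compact text summary of detections rather than full images is an assumption, not a detail from the article.

```python
from dataclasses import dataclass
from typing import Callable, List

Frame = List[List[float]]  # hypothetical stand-in for an image


@dataclass
class Detection:
    label: str
    x: int
    y: int
    score: float


def run_pipeline(
    frame: Frame,
    correct: Callable[[Frame], Frame],
    detect: Callable[[Frame], List[Detection]],
    send: Callable[[str], None],
    min_score: float = 0.5,
) -> List[Detection]:
    """Colour-correct a frame, detect fish, and transmit a compact summary."""
    corrected = correct(frame)  # placeholder for a DeepSeeColor-style correction
    detections = [d for d in detect(corrected) if d.score >= min_score]
    summary = ";".join(f"{d.label}@{d.x},{d.y}:{d.score:.2f}" for d in detections)
    send(summary)  # only a short text summary goes up, not the image
    return detections


if __name__ == "__main__":
    sent: List[str] = []
    run_pipeline(
        frame=[[0.1, 0.2], [0.3, 0.4]],
        correct=lambda f: f,  # identity placeholder
        detect=lambda f: [Detection("fish", 1, 0, 0.9), Detection("fish", 0, 1, 0.2)],
        send=sent.append,
    )
    print(sent)  # ['fish@1,0:0.90']
```

Keeping each stage as a separate callable makes it easy to swap in a different correction model or detector without touching the rest of the chain.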

Eyes and ears: fish and reef detection

CUREE packs four forward-facing cameras, four hydrophones for underwater audio capture, depth sensors and inertial measurement unit sensors. GPS doesn’t work underwater, so it is only used to initialize the robot’s starting position while on the surface.
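The article does not describe how CUREE estimates its position once submerged. One common approach, assumed here purely for illustration, is dead reckoning: treat the last GPS fix at the surface as the origin, integrate heading and speed estimates from the vehicle’s motion sensors over time, and read depth directly from the depth sensor. The names `Pose` and `dead_reckon` are hypothetical, and a real vehicle would typically fuse its sensors with a filter rather than integrate naively, because errors accumulate.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Pose:
    east_m: float   # metres east of the GPS fix
    north_m: float  # metres north of the GPS fix
    depth_m: float  # read directly from the depth sensor


def dead_reckon(samples: Iterable[Tuple[float, float, float, float]]) -> Pose:
    """Integrate (dt_s, heading_rad, speed_mps, depth_m) samples from a surface GPS fix.

    Heading is measured clockwise from north, so east uses sin and north uses cos.
    """
    pose = Pose(0.0, 0.0, 0.0)  # the GPS fix defines the local origin
    for dt, heading, speed, depth in samples:
        pose.east_m += speed * dt * math.sin(heading)
        pose.north_m += speed * dt * math.cos(heading)
        pose.depth_m = depth
    return pose


if __name__ == "__main__":
    # 10 s heading due east at 0.5 m/s, then 10 s due north at 0.5 m/s.
    track = [(1.0, math.pi / 2, 0.5, 2.0)] * 10 + [(1.0, 0.0, 0.5, 3.0)] * 10
    print(dead_reckon(track))  # roughly 5 m east, 5 m north, 3 m deep
```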

Together with AI models running on the Jetson Orin NX, the cameras and hydrophones let CUREE collect data for producing 3D models of reefs and undersea terrain.

To use the hydrophones for audio data collection, CUREE needs to drift with its motor off so that there’s no interference with the audio.

“It can build a spatial soundscape map of the reef, using sounds produced by different animals,” says Yogesh Girdhar, an associate scientist at WHOI, who leads WARPLab. “We currently (in post-processing) detect where all the chatter associated with bioactivity hotspots is,” he adds, referring to all the noises of sea life.
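The article doesn’t explain how CUREE localises sounds, but a standard building block for this kind of map is time difference of arrival (TDOA). When a distant sound reaches two hydrophones spaced d metres apart with a delay Δt, its angle from the pair’s broadside is θ = arcsin(c·Δt / d), where c is the speed of sound in seawater (roughly 1,500 m/s). The sketch below assumes that far-field model; the function name and the 0.3 m spacing in the example are hypothetical, not CUREE’s actual geometry.

```python
import math

SPEED_OF_SOUND_SEAWATER = 1500.0  # m/s, a typical approximate value


def bearing_from_tdoa(delta_t_s: float, spacing_m: float,
                      c: float = SPEED_OF_SOUND_SEAWATER) -> float:
    """Far-field arrival angle, in degrees from broadside, for one hydrophone pair.

    delta_t_s is how much later the sound reaches hydrophone B than hydrophone A,
    so positive angles point toward hydrophone A's side.
    """
    ratio = c * delta_t_s / spacing_m
    if abs(ratio) > 1.0:
        raise ValueError("delay too large for this spacing; check timing or geometry")
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    # A 0.1 ms delay across a hypothetical 0.3 m baseline.
    print(round(bearing_from_tdoa(1e-4, 0.3), 1))  # 30.0
```

A single pair gives an angle with left/right ambiguity; combining several pairs, and bearings taken from different positions as the robot drifts, is what would let detections be placed on a spatial map.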

The team has been training detection models for both audio and video input to track creatures. But one creature in particular has been a major source of noise, making clear audio samples hard to capture.

“The problem is that, underwater, the snapping shrimps are loud,” says Girdhar. Above water, this classic challenge of picking out one sound from background noise is known as the cocktail party problem. “If only we could figure out an algorithm to remove the effects of sounds of snapping shrimps from audio, but at the moment we don’t have a good solution,” he says.

Although few underwater datasets exist, the team’s pioneering work on fish detection and tracking is going well, says Levi Cai, a PhD candidate in the MIT-WHOI joint program. He says they’re taking a semi-supervised approach to the marine animal tracking problem. Tracking is initialised with targets found by a fish detection neural network that was trained on open-source fish detection datasets and then fine-tuned, via transfer learning, on images gathered by CUREE.

“We manually drive the vehicle until we see an animal that we want to track, and then we click on it and have the semi-supervised tracker take over from there,” says Cai.
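One simple way to picture “click on it and have the tracker take over” is to select the detection box containing the clicked point, then, in each new frame, follow the detection that overlaps the previous box most, measured by intersection over union (IoU). This is a deliberately basic tracking-by-detection scheme with hypothetical names, assumed for illustration; it is not the semi-supervised tracker Cai’s team uses.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def pick_target(click: Tuple[float, float], detections: List[Box]) -> Optional[Box]:
    """The operator's click selects the first detection box containing the point."""
    x, y = click
    for box in detections:
        if box[0] <= x <= box[2] and box[1] <= y <= box[3]:
            return box
    return None


def track_step(previous: Box, detections: List[Box], min_iou: float = 0.3) -> Optional[Box]:
    """Follow the target into the next frame via the best-overlapping detection."""
    best = max(detections, key=lambda d: iou(previous, d), default=None)
    if best is None or iou(previous, best) < min_iou:
        return None  # target lost; an operator could re-initialise with another click
    return best


if __name__ == "__main__":
    frame0 = [(10, 10, 50, 40), (100, 80, 140, 120)]
    target = pick_target((30, 25), frame0)  # selects (10, 10, 50, 40)
    frame1 = [(105, 82, 145, 122), (14, 12, 54, 42)]
    print(track_step(target, frame1))  # (14, 12, 54, 42)
```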

Jetson Orin energy efficiency drives CUREE

Energy efficiency is critical for small AUVs like CUREE. Computing for data collection consumes roughly 25% of the available energy, with propulsion taking the remainder.

CUREE typically operates for up to two hours on a charge, depending on the reef mission and the observation requirements, says Girdhar, who goes on the dive missions in St John in the US Virgin Islands.
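A back-of-the-envelope calculation shows why compute efficiency still matters when propulsion dominates. Assuming a constant average power draw, the 25/75 compute/propulsion split and a two-hour baseline (both simplifications, since real missions vary), endurance scales with the inverse of total power, so a cut in compute power lengthens the mission as follows. The function name is hypothetical.

```python
def runtime_hours(baseline_h: float, compute_share: float, compute_saving: float) -> float:
    """Endurance after reducing compute power by `compute_saving` (0 to 1).

    Assumes constant average power: total power is normalised to 1 at the
    baseline, and runtime scales with 1 / (new total power).
    """
    if not (0.0 <= compute_share <= 1.0 and 0.0 <= compute_saving <= 1.0):
        raise ValueError("shares must be between 0 and 1")
    new_power = 1.0 - compute_share * compute_saving
    return baseline_h / new_power


if __name__ == "__main__":
    for saving in (0.0, 0.25, 0.5):
        hours = runtime_hours(2.0, 0.25, saving)
        print(f"{saving:.0%} less compute power -> {hours * 60:.0f} min")
    # 0% -> 120 min, 25% -> 128 min, 50% -> 137 min
```

Under these assumptions, halving compute power would add roughly 17 minutes to a two-hour dive.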

To enhance energy efficiency, the team is looking into AI for managing the sensors so that computing resources automatically stay awake while making observations and sleep when not in use.
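The article doesn’t say how this sensor management will work. As a hypothetical sketch, a controller could keep compute awake while an observation is under way and put it to sleep after a period with nothing of interest, using a simple timeout. The class name, the `something_interesting` trigger and the timeout value are all assumptions; what cheap signal would raise the trigger is left open.

```python
from dataclasses import dataclass


@dataclass
class ComputeGovernor:
    """Keeps compute awake during observations and sleeps it after an idle timeout."""

    idle_timeout_s: float = 30.0  # hypothetical value
    awake: bool = False
    last_activity_s: float = 0.0

    def update(self, now_s: float, something_interesting: bool) -> bool:
        """Feed one observation tick; returns whether compute should be awake."""
        if something_interesting:
            self.awake = True
            self.last_activity_s = now_s
        elif self.awake and now_s - self.last_activity_s >= self.idle_timeout_s:
            self.awake = False  # nothing seen for a while: power down
        return self.awake


if __name__ == "__main__":
    gov = ComputeGovernor(idle_timeout_s=10.0)
    events = [(0, False), (5, True), (12, False), (16, False), (20, True)]
    print([gov.update(t, seen) for t, seen in events])
    # [False, True, True, False, True]
```

The timeout keeps compute from toggling on and off with every brief gap in activity, at the cost of some idle energy after each observation.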

“Our robot is small, so the amount of energy spent on GPU computing actually matters — with Jetson Orin NX our power issues are gone, and it’s made our system much more robust,” says Girdhar.

Exploring Isaac Sim to make improvements

The WARPLab team is experimenting with Nvidia Isaac Sim, a scalable robotics simulation application and synthetic data generation tool powered by Omniverse, to accelerate development of autonomy and observation for CUREE.

The goal is to run simple simulations in Isaac Sim that capture the core of the problem, and then finish the training in the real world, undersea, says Girdhar.

“In a coral reef environment, we cannot depend on sonars — we need to get up really close,” he says. “Our goal is to observe different ecosystems and processes happening.”

Understanding ecosystems and creating mitigation strategies

The WARPLab team intends to make the CUREE platform available for others to understand the impact humans are having on undersea environments and to help create mitigation strategies.

The researchers plan to learn from patterns that emerge from the data. CUREE acts as an almost fully autonomous data-collecting scientist that can communicate findings to human researchers, says Jamieson. “A scientist gets way more out of this than if the task had to be done manually, driving it around staring at a screen all day.”

Girdhar says ecosystems like coral reefs can be modeled as a network, with different nodes corresponding to different species and habitat types. Within that network, he says, many different interactions are happening, and the researchers seek to understand it to learn about the relationships between various animals and their habitats.
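Girdhar’s network view maps naturally onto a graph data structure. The minimal sketch below stores nodes for species and habitat types and labelled, directed edges for interactions, then lists what a given node interacts with. The class name, node names and interaction labels are illustrative placeholders, not CUREE data or WARPLab’s model.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Tuple


class EcosystemGraph:
    """Directed graph: nodes are species or habitat types, edges are interactions."""

    def __init__(self) -> None:
        self.kind: Dict[str, str] = {}  # node -> "species" or "habitat"
        self.edges: DefaultDict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add_node(self, name: str, kind: str) -> None:
        self.kind[name] = kind

    def add_interaction(self, src: str, dst: str, relation: str) -> None:
        """Record a directed, labelled interaction between two known nodes."""
        for node in (src, dst):
            if node not in self.kind:
                raise KeyError(f"unknown node: {node}")
        self.edges[src].append((dst, relation))

    def interactions_of(self, name: str) -> List[Tuple[str, str]]:
        """Return (target, relation) pairs for interactions starting at `name`."""
        return list(self.edges.get(name, []))


if __name__ == "__main__":
    g = EcosystemGraph()
    g.add_node("parrotfish", "species")
    g.add_node("turf algae", "species")
    g.add_node("coral", "species")
    g.add_node("reef crest", "habitat")
    g.add_interaction("parrotfish", "turf algae", "grazes")
    g.add_interaction("turf algae", "coral", "competes for space with")
    g.add_interaction("coral", "reef crest", "builds")
    print(g.interactions_of("parrotfish"))  # [('turf algae', 'grazes')]
```

Edge weights, such as how often an interaction is observed, could later be added to each tuple so that the network reflects what the data actually shows.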

The hope is to collect enough data with CUREE AUVs to gain a comprehensive understanding of ecosystems, how they might progress over time, and how they are affected by harbors, pesticide runoff, carbon emissions and dive tourism.

“We can then better design and deploy interventions and determine, for example, if we planted new corals how they would change the reef over time,” says Girdhar.