Intel has unveiled an array of technologies aimed at bringing artificial intelligence (AI) everywhere and making it more accessible across all workloads, from client and edge to network and cloud.

“AI represents a generational shift, giving rise to a new era of global expansion where computing is even more foundational to a better future for all,” says Intel CEO Pat Gelsinger, speaking to delegates at the Intel Innovation event. “For developers, this creates massive societal and business opportunities to push the boundaries of what’s possible, to create solutions to the world’s biggest challenges and to improve the life of every person on the planet.”

Gelsinger showed how Intel is bringing AI capabilities across its hardware products and making them accessible through open, multi-architecture software solutions. He also highlighted how AI is helping to drive the “siliconomy”, a “growing economy enabled by the magic of silicon and software”.

Today, silicon feeds a $574-billion industry that in turn powers a global tech economy worth almost $8-trillion.

Silicon, packaging and multi-chiplet solutions

The work begins with silicon innovation. Intel’s five-nodes-in-four-years process development program is progressing well, Gelsinger says, with Intel 7 already in high-volume manufacturing, Intel 4 manufacturing-ready and Intel 3 on track for the end of this year.

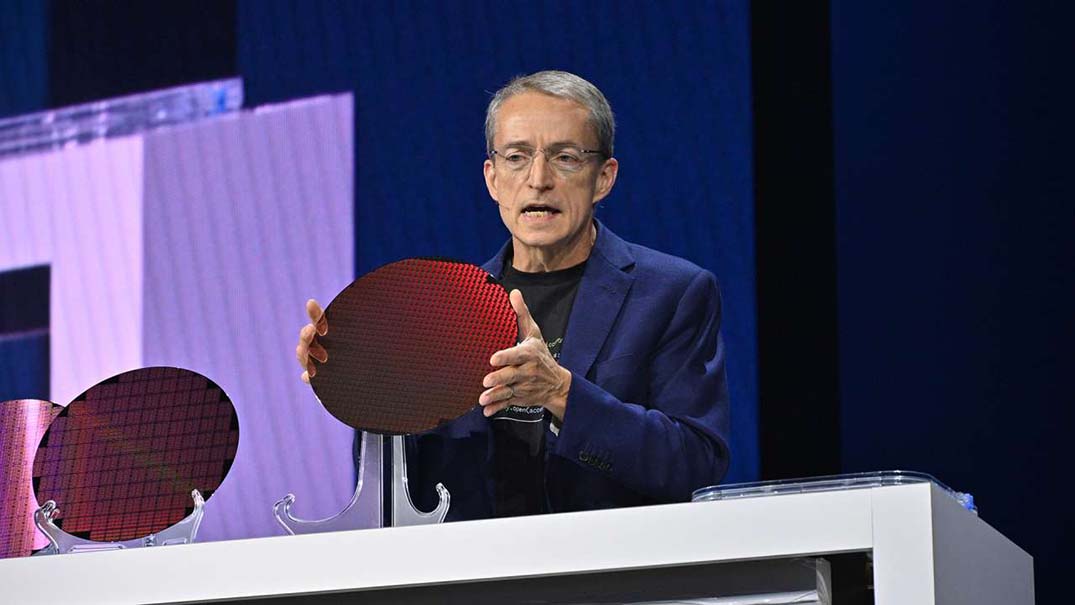

Gelsinger showed an Intel 20A wafer with the first test chips for Intel’s Arrow Lake processor, which is destined for the client computing market in 2024. Intel 20A will be the first process node to include PowerVia, Intel’s backside power delivery technology, and the new gate-all-around transistor design called RibbonFET. Intel 18A, which also leverages PowerVia and RibbonFET, remains on track to be manufacturing-ready in the second half of 2024.

Intel CEO Pat Gelsinger displays an Intel 20A wafer with the first test chips for Intel’s Arrow Lake processor, which is destined for the client computing market in 2024.

Credit: Intel Corporation

Another way Intel is pushing Moore’s Law forward is with new materials and packaging technologies, such as glass substrates, a breakthrough Intel announced this week. When introduced later this decade, glass substrates will allow transistors on a package to keep scaling, helping to meet the needs of data-intensive, high-performance workloads like AI, and, according to Intel, will keep Moore’s Law going well beyond 2030.

Intel also displayed a test chip package built with Universal Chiplet Interconnect Express (UCIe). The next wave of Moore’s Law will arrive with multi-chiplet packages, Gelsinger said, and it will come sooner if open standards can reduce the friction of integrating IP. Formed last year, the UCIe standard will allow chiplets from different vendors to work together, enabling new designs for a growing range of AI workloads. The open specification is supported by more than 120 companies.

The test chip combined an Intel UCIe IP chiplet fabricated on Intel 3 and a Synopsys UCIe IP chiplet fabricated on the TSMC N3E process node. The chiplets are connected using embedded multi-die interconnect bridge (EMIB) advanced packaging technology. The demonstration highlights the commitment of TSMC, Synopsys and Intel Foundry Services to supporting an open, standards-based chiplet ecosystem with UCIe.

Increasing performance and expanding AI everywhere

Gelsinger spotlighted the range of AI technology available to developers across Intel platforms today – and how that range will dramatically increase over the coming year.

Recent MLPerf AI inference performance results further reinforce Intel’s commitment to addressing every phase of the AI continuum, including the largest and most challenging generative AI and large language models. According to Intel, the results also position the Intel Gaudi2 accelerator as the only viable alternative on the market for AI compute needs.

Gelsinger announced that a large AI supercomputer will be built entirely on Intel Xeon processors and 4,000 Intel Gaudi2 AI hardware accelerators, with Stability AI as the anchor customer.

Intel also previewed the next generation of Intel Xeon processors, revealing that 5th Gen Intel Xeon processors, launching on 14 December, will bring a combination of performance improvements and faster memory to the world’s data centres while using the same amount of power.

Sierra Forest, with E-core efficiency and arriving in the first half of 2024, will deliver 2,5x better rack density and 2,4x higher performance per watt compared with 4th Gen Xeon, and will include a version with 288 cores. Granite Rapids, with P-core performance, will closely follow the launch of Sierra Forest, offering 2x to 3x better AI performance compared with 4th Gen Xeon.

Looking ahead to 2025, the next-gen E-core Xeon, code-named Clearwater Forest, will arrive on the Intel 18A process node.

AI PC with Intel Core Ultra processors

AI is about to get more personal, too. “AI will fundamentally transform, reshape and restructure the PC experience – unleashing personal productivity and creativity through the power of the cloud and PC working together,” Gelsinger says. “We are ushering in a new age of the AI PC.”

The new PC experience arrives with the upcoming Intel Core Ultra processors, code-named Meteor Lake, featuring Intel’s first integrated neural processing unit, or NPU, for power-efficient AI acceleration and local inference on the PC. Gelsinger confirmed that Core Ultra will also launch on 14 December.

Core Ultra represents an inflection point in Intel’s client processor roadmap: it’s the first client chiplet design enabled by Foveros packaging technology. In addition to the NPU and major advances in power-efficient performance thanks to Intel 4 process technology, the new processor brings discrete-level graphics performance with onboard Intel Arc graphics.

Developers in the siliconomy driver’s seat

“AI going forward must deliver more access, scalability, visibility, transparency and trust to the whole ecosystem,” Gelsinger says.

To help developers unlock this future, Intel has announced:

- General availability of the Intel Developer Cloud: The Intel Developer Cloud helps developers accelerate AI using the latest Intel hardware and software innovations, including Intel Gaudi2 accelerators for deep learning, and provides access to the latest Intel hardware platforms, such as 5th Gen Intel Xeon Scalable processors and the Intel Data Centre GPU Max Series 1100 and 1550. Developers can use it to build, test and optimize AI and HPC applications, and to run small- to large-scale AI training, model optimization and inference workloads with high performance and efficiency. The Intel Developer Cloud is built on an open software foundation with oneAPI, an open, multiarchitecture, multivendor programming model that gives developers hardware choice and freedom from proprietary programming models, while supporting accelerated computing, code reuse and portability.

- The 2023.1 release of the Intel Distribution of OpenVINO toolkit: OpenVINO is Intel’s AI inferencing and deployment runtime of choice for developers on client and edge platforms. The release includes pre-trained models optimized for integration across operating systems and different cloud solutions, including many generative AI models, such as the Llama 2 model from Meta. On stage, companies including ai.io and Fit:match demonstrated how they use OpenVINO to accelerate their applications: ai.io uses it to evaluate the performance of potential athletes, and Fit:match uses it to transform the retail and wellness industries by helping consumers find the best-fitting garments.

- Project Strata and the development of an edge-native software platform: The platform will launch in 2024 with modular building blocks and premium service and support offerings. It takes a horizontal approach to scaling the infrastructure needed for the intelligent edge and hybrid AI, and will bring together an ecosystem of Intel and third-party vertical applications. The solution will enable developers to build, deploy, run, manage, connect and secure distributed edge infrastructure and applications.

Featured picture: Intel CEO Pat Gelsinger introduces the “Siliconomy” at Intel Innovation.

Credit: Intel Corporation