AI and generative AI (GenAI) are driving rapid increases in electricity consumption, with forecast growth in data centre electricity use over the next two years reaching as high as 160%, according to Gartner.

As a result, Gartner predicts 40% of existing AI data centres will be operationally constrained by power availability by 2027.

“The explosive growth of new hyperscale data centers to implement GenAI is creating an insatiable demand for power that will exceed the ability of utility providers to expand their capacity fast enough,” says Bob Johnson, vice-president analyst at Gartner.

“In turn, this threatens to disrupt energy availability and lead to shortages, which will limit the growth of new data centers for GenAI and other uses from 2026.”

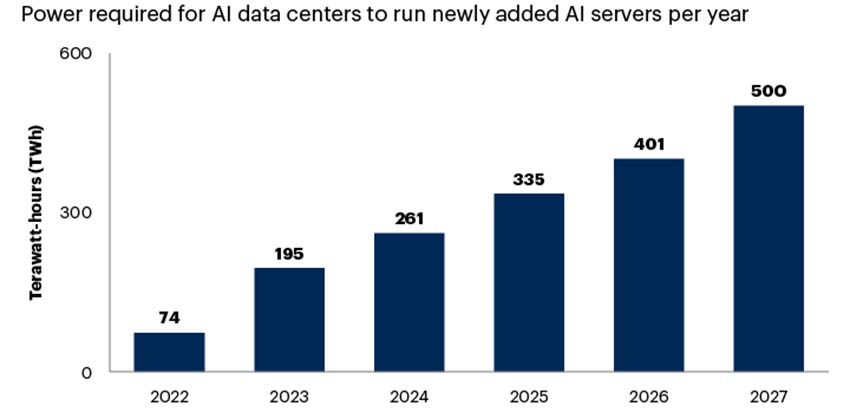

Gartner estimates the power required for data centres to run incremental AI-optimised servers will reach 500 terawatt-hours (TWh) per year in 2027, which is 2.6 times the level in 2023.

Source: Gartner (November 2024)
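As a rough check on these figures, the sketch below derives two numbers the article does not state: the 2023 level implied by the 2.6-times multiple, and the constant annual growth rate that would reach that multiple over the four years from 2023 to 2027. Both are simple derivations from the quoted figures, assuming growth compounds evenly each year; they are not Gartner estimates, and the function names are hypothetical.

```python
# Back-of-the-envelope check of Gartner's headline figures.
# Inputs come from the article; the 2023 baseline and the annual
# growth rate are derived here (assuming even compounding), not quoted.

POWER_2027_TWH = 500.0   # Gartner estimate for 2027, in TWh per year
MULTIPLE_VS_2023 = 2.6   # 2027 level relative to the 2023 level
YEARS = 2027 - 2023      # length of the forecast window in years


def implied_baseline(target: float, multiple: float) -> float:
    """Return the starting value implied by target = baseline * multiple."""
    return target / multiple


def compound_annual_growth(multiple: float, years: int) -> float:
    """Return the constant yearly growth rate that compounds to `multiple`."""
    return multiple ** (1 / years) - 1


baseline_2023 = implied_baseline(POWER_2027_TWH, MULTIPLE_VS_2023)
cagr = compound_annual_growth(MULTIPLE_VS_2023, YEARS)

print(f"Implied 2023 level: {baseline_2023:.0f} TWh")
print(f"Implied annual growth: {cagr:.1%}")
```

Run as-is, it reports an implied 2023 level of about 192 TWh and annual growth of roughly 27%, which shows how steep a 2.6-fold rise over four years is.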

“New larger data centers are being planned to handle the huge amounts of data needed to train and implement the rapidly expanding large language models (LLMs) that underpin GenAI applications,” says Johnson. “However, short-term power shortages are likely to continue for years as new power transmission, distribution and generation capacity could take years to come online and won’t alleviate current problems.”

In the near future, the number of new data centres and the growth of GenAI will be governed by the availability of power to run them.

Gartner recommends organisations determine the risks that potential power shortages pose to all their products and services.

Electricity prices will increase

The inevitable result of impending power shortages is an increase in the price of power, which will also increase the costs of operating LLMs, according to Gartner.

“Significant power users are working with major producers to secure long-term guaranteed sources of power independent of other grid demands,” says Johnson. “In the meantime, the cost of power to operate data centers will increase significantly as operators use economic leverage to secure needed power. These costs will be passed on to AI/GenAI product and service providers as well.”

Gartner recommends that organisations evaluate future plans in anticipation of higher power costs, and negotiate long-term contracts for data centre services at reasonable power rates.

They should also factor in significant cost increases when developing plans for new products and services, while looking for alternative approaches that require less power.

Sustainability goals will suffer

Zero-carbon sustainability goals will also be negatively affected by short-term solutions to provide more power, as surging demand is forcing suppliers to increase production by any means possible.

In some cases, this means keeping fossil fuel plants in operation beyond their scheduled retirement dates.

“The reality is that increased data center use will lead to increased CO2 emissions to generate the needed power in the short-term,” says Johnson. “This, in turn, will make it more difficult for data center operators and their customers to meet aggressive sustainability goals relating to CO2 emissions.”

Data centres require 24/7 power availability, which renewable sources such as wind or solar cannot provide without some form of alternative supply during periods when they are not generating, according to Gartner. Reliable 24/7 power can only be generated by hydroelectric, fossil fuel or nuclear power plants.

In the long term, new technologies for improved battery storage (such as sodium-ion batteries) or clean power (such as small nuclear reactors) will become available and help achieve sustainability goals.

Gartner recommends organisations re-evaluate sustainability goals relating to CO2 emissions in light of future data centre requirements and power sources for the next few years.

When developing GenAI applications, they should focus on using as little computing power as possible and assess the viability of alternatives such as edge computing and smaller language models.